Introduction:

Artificial intelligence (AI) is the field concerned with machines that demonstrate human-like intelligence. AI makes it possible for machines to learn from experience, adjust to new inputs and perform human-like tasks. The most common day-to-day examples of AI are voice assistants (Siri, Alexa), self-driving cars, text and other predictions, and smart email filtering. Most AI applications build on related technologies such as machine learning, deep learning, natural language processing and big data. Though AI can help machines outperform humans on specific tasks, machines still need humans to train them.

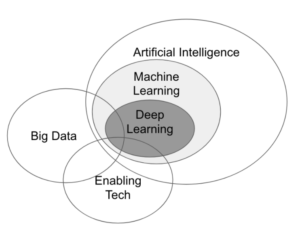

The Venn diagram above shows how AI relates to the technologies that are subsets of it.

Brief History: AI has become especially popular in recent years thanks to growing volumes of data, new algorithms and increases in computing power, but it has been around since the term was coined in 1956. Early research focused on problem-solving, and later projects such as the street-mapping work of the Defense Advanced Research Projects Agency (DARPA) paved the way for the automated systems that followed.


AI works by combining large amounts of data with fast, iterative processing and intelligent algorithms, allowing the software to learn automatically from patterns or features in the data. AI is a broad field of study that includes many theories, methods, and technologies, as well as the following major sub-fields:

- Machine Learning automates analytical model building. It uses methods from neural networks, statistics, operations research and physics to find hidden insights in data without being explicitly programmed where to look or what to conclude.

- A Neural Network is a type of machine learning made up of interconnected units (like neurons) that process information by responding to external inputs and relaying information between units. Training requires multiple passes over the data to find connections and derive meaning from it.

- Deep Learning uses huge neural networks with many layers of processing units, taking advantage of advances in computing power and improved training techniques to learn complex patterns in large amounts of data. Common applications include image and speech recognition.

- Cognitive Computing is a sub-field of AI that strives for natural, human-like interaction with machines. The ultimate goal of AI and cognitive computing is for a machine to simulate human processes, such as interpreting images and speech, and then respond coherently.

- Computer Vision relies on pattern recognition and deep learning to recognize what’s in a picture or video. When machines can process, analyze and understand images, they can capture images or videos in real time and interpret their surroundings.

- Natural Language Processing (NLP) is the ability of computers to analyze, understand and generate human language, including speech. The next stage of NLP is natural language interaction, which allows humans to communicate with computers using normal, everyday language to perform tasks.
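To make the neural network description above a little more concrete, here is a minimal sketch of a forward pass: each unit takes a weighted sum of its inputs, squashes it with a sigmoid, and relays the result to the next layer. It assumes plain Python with no ML library, and the function names and hand-picked weights are hypothetical; a real network would learn its weights from data rather than have them typed in.

```python
import math


def sigmoid(z: float) -> float:
    """Squash a unit's weighted input into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(inputs, weights, biases):
    """Each unit responds to all inputs: weighted sum plus bias, then sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(unit_weights, inputs)) + b)
        for unit_weights, b in zip(weights, biases)
    ]


def network_forward(inputs, layers):
    """Relay information layer by layer: each layer's outputs feed the next."""
    activations = list(inputs)
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


# Hypothetical, hand-picked weights (not trained): 2 inputs -> 2 hidden units -> 1 output.
example_layers = [
    ([[0.5, -0.4], [0.3, 0.8]], [0.0, -0.1]),  # hidden layer
    ([[1.2, -0.7]], [0.05]),                   # output layer
]

output = network_forward([1.0, 0.5], example_layers)
print(output)
```

A "deep" network in the sense of the Deep Learning bullet is the same idea with many more layers and units.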

In summary, the goal of AI is to provide software that can reason about its input and explain its output. AI will provide human-like interactions with software and offer decision support for specific tasks, but it is not a replacement for humans and won't be anytime soon.

Difference between Artificial Intelligence & Machine Learning

Why is Artificial Intelligence important?

- It automates repetitive learning and discovery through data. But AI is different from hardware-driven, robotic automation. Instead of automating manual tasks, AI performs frequent, high-volume, computerized tasks reliably and without fatigue. For this type of automation, human inquiry is still essential to set up the system and ask the right questions.

- It adds intelligence to existing products. In most cases, AI will not be sold as an individual application. Rather, products you already use will be improved with AI capabilities, much like Siri was added as a feature to a new generation of Apple products. Automation, conversational platforms, bots and smart machines can be combined with large amounts of data to improve many technologies at home and in the workplace, from security intelligence to investment analysis.

- It adapts through progressive learning algorithms to let the data do the programming. AI finds structure and regularities in data so that the algorithm acquires a skill: the algorithm becomes a classifier or a predictor. So, just as an algorithm can teach itself how to play chess, it can teach itself what product to recommend next online. And the models adapt when given new data. Backpropagation is an AI technique that allows the model to adjust, through training and added data, when the first answer is not quite right.

- It analyzes more and deeper data using neural networks that have many hidden layers. Building a fraud detection system with five hidden layers was almost impossible a few years ago. That has changed with far greater computing power and big data. You need lots of data to train deep learning models because they learn directly from the data, and the more data you can feed them, the more accurate they tend to become.

- It achieves accuracy through deep neural networks that was previously impossible. For example, your interactions with Alexa, Google Search and Google Photos are all based on deep learning, and they keep getting more accurate the more we use them. In the medical field, AI techniques from deep learning, image classification and object recognition can now be used to find cancer on MRIs with the same accuracy as highly trained radiologists.

- It gets the most out of data. When algorithms are self-learning, the data itself can become intellectual property. The answers are in the data; you just have to apply AI to get them out. Since the role of the data is now more important than ever before, it can create a competitive advantage. If you have the best data in a competitive industry, even if everyone is applying similar techniques, the best data will win.
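The backpropagation point above is about correcting a model when its answer is not quite right. Backpropagation applies the chain rule through every layer of a network; the sketch below shows only the simplest case, a single sigmoid neuron trained by gradient descent, where the error signal for the weights reduces to (prediction - target). The dataset (logical OR), function names, learning rate and epoch count are all illustrative assumptions, not part of any particular library.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x1, x2):
    """Output of a single neuron with two inputs."""
    return sigmoid(w[0] * x1 + w[1] * x2 + b)


def train_neuron(samples, epochs=2000, learning_rate=0.5, seed=0):
    """Hypothetical helper: adjust weights whenever the prediction misses the target."""
    rng = random.Random(seed)
    w = [rng.uniform(-1, 1), rng.uniform(-1, 1)]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            # For sigmoid + cross-entropy loss, dLoss/dz is simply (prediction - target).
            error = predict(w, b, x1, x2) - target
            # Move each parameter against its gradient.
            w[0] -= learning_rate * error * x1
            w[1] -= learning_rate * error * x2
            b -= learning_rate * error
    return w, b


# Logical OR: a tiny, linearly separable training set.
or_samples = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
weights, bias = train_neuron(or_samples)
for (x1, x2), target in or_samples:
    print(x1, x2, target, round(predict(weights, bias, x1, x2), 3))
```

In a multi-layer network the same error signal is passed backwards layer by layer, which is where the name backpropagation comes from.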

Currently, AI works by formulating tasks as prediction problems and then using statistical techniques and lots of data to make predictions. One simple example of a text-based prediction problem is auto-complete. In machine learning, prediction problems like this are called supervised learning.
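Auto-complete can be framed as exactly this kind of prediction problem: each (previous word, next word) pair in some text is a labelled training example. The sketch below is a deliberately tiny word-bigram model that just counts those pairs; it assumes a made-up three-sentence corpus and hypothetical function names, and real auto-complete systems use far larger data and neural models.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus):
    """Count which word follows which: each (previous, next) pair is a labelled example."""
    followers = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for previous, nxt in zip(words, words[1:]):
            followers[previous][nxt] += 1
    return followers


def suggest(model, word, k=3):
    """Return up to k of the most frequent words seen after `word` during training."""
    return [w for w, _ in model.get(word.lower(), Counter()).most_common(k)]


toy_corpus = [
    "machine learning finds patterns in data",
    "deep learning finds patterns in images",
    "machine learning needs data",
]
model = train_bigram_model(toy_corpus)
print(suggest(model, "learning"))  # ['finds', 'needs']
```

Counting is the simplest possible "statistical technique" here; the supervised structure (input word, correct next word) is the part that carries over to larger models.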

Applications of AI: Today, AI is in rising demand in every industry that involves interaction. Some of its application areas are:

- Healthcare: AI applications can be used to track medical reports and readings, and personal healthcare assistants can remind you to take your pills, exercise or eat healthier, or act as virtual coaches.

- Retail: AI provides virtual shopping capabilities that offer personalized recommendations and discuss purchase options with the consumer.

- Manufacturing: AI can analyze factory IoT data as it streams from connected equipment to forecast expected load and demand using recurrent networks, a specific type of deep learning network used with sequence data.

- Sports: AI is used to capture images of game play and provide coaches with reports on how to better organize the game, including optimizing field positions and sketching new strategies.
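The manufacturing example mentions recurrent networks for sequence data. What makes a network "recurrent" is a hidden state that is carried from one time step to the next, so each output depends on the history of readings and not only the current one. The sketch below shows just that forward recurrence for a single-unit, Elman-style cell; the weights and load readings are hypothetical, and a real forecasting model would learn its weights (for example with backpropagation through time) and typically use larger LSTM or GRU layers.

```python
import math


def rnn_forward(sequence, w_input, w_hidden, bias, w_output):
    """Run a single-unit, Elman-style recurrent cell over a sequence.

    The hidden state carries a summary of earlier readings forward, so the
    output at each step depends on the whole history, not just the current value.
    """
    hidden = 0.0
    outputs = []
    for x in sequence:
        hidden = math.tanh(w_input * x + w_hidden * hidden + bias)
        outputs.append(w_output * hidden)
    return outputs


# Hypothetical, untrained weights and made-up normalized load readings.
readings = [0.5, 0.5, 0.5, 0.9]
outputs = rnn_forward(readings, w_input=0.8, w_hidden=0.5, bias=0.0, w_output=1.0)
print([round(o, 3) for o in outputs])
```

Note that the first two readings are identical but the outputs differ, because the second step also sees the hidden state left by the first.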

Top companies working on Artificial Intelligence:

Though AI has been implemented in many organizations and real-world scenarios, it is still under development and many more techniques are evolving. According to Forbes, some of the top companies and organizations doing AI research are:

- DeepMind

- Google Brain Team

- OpenAI

- Baidu

- Microsoft Research

- Apple

- IBM

There are also smaller startups working in the field of AI. Such startups are acquisition targets for big tech companies as well as for traditional insurance, retail and healthcare incumbents, and most of them have been acquired by technology giants to collaborate on research. These acquisitions are represented in the image below.

Working together with Artificial Intelligence

Artificial intelligence is not here to replace us. It augments our abilities and makes us better at what we do. Because AI algorithms learn differently than humans, they look at things differently. They can see relationships and patterns that escape us. This human-AI partnership offers many opportunities. It can:

- Bring analytics to industries and domains where it’s currently underutilized.

- Improve the performance of existing analytic technologies, like computer vision and time series analysis.

- Break down economic barriers, including language and translation barriers.

- Augment existing abilities and make us better at what we do.

- Give us better vision, better understanding, better memory and much more.

To excel in AI, you need to know how machine learning can be used to build predictive models; how software can process, analyze and extract meaning from natural language; how to process images and video to understand the real world; and how to build intelligent bots that enable conversational communication between humans and AI systems.

Educational resources:

Now that we have an idea of what AI is, the next question is where to learn more about it. That part is easy: there are many books and online resources about AI and its components. I personally recommend the following, including courses from top university professors, for a better understanding of the subject.

- Artificial Intelligence: A Modern Approach - Stuart Russell and Peter Norvig

- Artificial Intelligence for Humans - Jeff Heaton

- Online Resources

Future Trends - Top Artificial Intelligence Forecasts by 2025

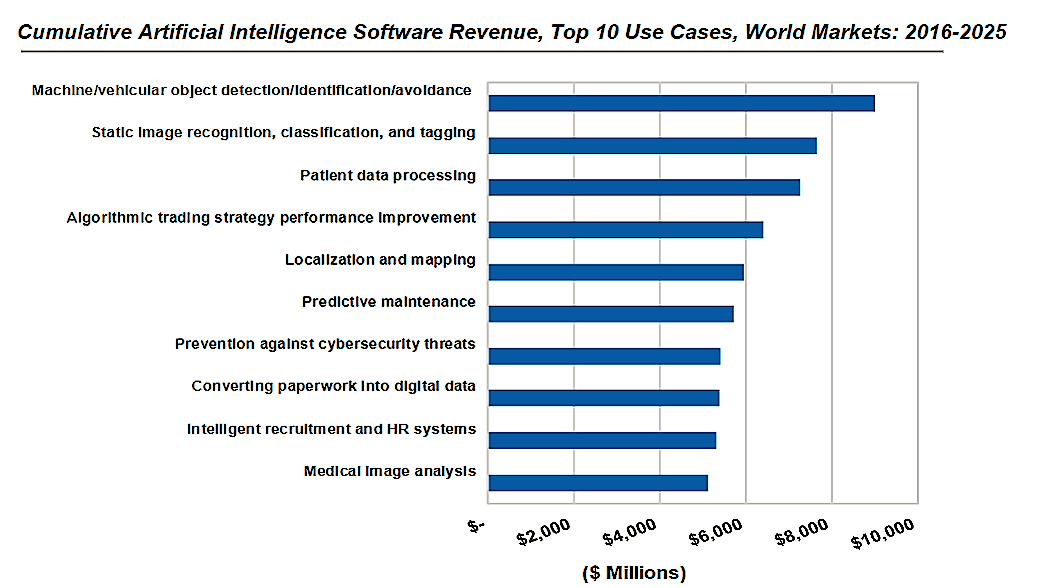

According to a recent Tractica study, image- and vision-related AI applications combined will represent 30% of the total AI market by 2025, a market projected to reach $60 billion by that year. The applications the study considers to have the most market potential in revenue terms for the coming years are those aimed at:

Sources:

- https://www.sas.com/en_us/insights/analytics/what-is-artificial-intelligence.html
- https://hbr.org/2017/07/why-ai-cant-write-this-article-yet
- https://www.edx.org/course/introduction-to-artificial-intelligence-ai
- https://www.forbes.com/sites/quora/2017/02/24/what-companies-are-winning-the-race-for-artificial-intelligence/#2a672cf5f5cd
- https://www.coursera.org/courses?languages=en&query=ai
- https://airesources.org/
- https://digitalfullpotential.com/ai-applications-market-forecasts/
