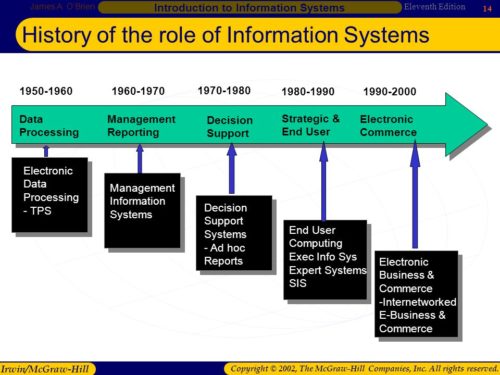

were invented over the millennia, new capabilities appeared, and people became empowered. The invention of the printing press by Johannes Gutenberg in the mid-15th century and the invention of a mechanical calculator by Blaise Pascal in the 17th century are but two examples. These inventions led to a profound revolution in the ability to record, process, disseminate, and reach for information and knowledge. This led, in turn, to even deeper changes in individual lives, business organization, and human governance.

The first large-scale mechanical information system was Herman Hollerith’s census tabulator. Invented in time to process the 1890 U.S. census, Hollerith’s machine represented a major step in automation, as well as an inspiration to develop computerized information systems.

One of the first computers used for such information processing was the UNIVAC I, installed at the U.S. Bureau of the Census in 1951 for administrative use and at General Electric in 1954 for commercial use. Beginning in the late 1970s, personal computers brought some of the advantages of information systems to small businesses and to individuals. Early in the same decade the Internet began its expansion as the global network of networks. In 1991 the World Wide Web, invented by Tim Berners-Lee as a means to access the interlinked information stored in the globally dispersed computers connected by the Internet, began operation and became the principal service delivered on the network. The global penetration of the Internet and the Web has enabled access to information and other resources and facilitated the forming of relationships among people and organizations on an unprecedented scale. The progress of electronic commerce over the Internet has resulted in a dramatic growth in digital interpersonal communications (via e-mail and social networks), distribution of products (software, music, e-books, and movies), and business transactions (buying, selling, and advertising on the Web). With the worldwide spread of smartphones, tablets, laptops, and other computer-based mobile devices, all of which are connected by wireless communication networks, information systems have been extended to support mobility as the natural human condition.

As information systems enabled more diverse human activities, they exerted a profound influence over society. These systems quickened the pace of daily activities, enabled people to develop and maintain new and often more-rewarding relationships, affected the structure and mix of organizations, changed the type of products bought, and influenced the nature of work. Information and knowledge became vital economic resources. Yet, along with new opportunities, the dependence on information systems brought new threats. Intensive industry innovation and academic research continually develop new opportunities while aiming to contain the threats.

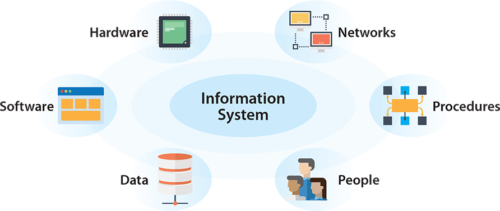

Components of Information Systems

The main components of information systems are computer hardware and software, telecommunications, databases and data warehouses, human resources, and procedures. The hardware, software, and telecommunications constitute information technology (IT).

Computer Hardware

Today throughout the world even the smallest firms, as well as many households, own or lease computers. Individuals may own multiple computers in the form of smartphones, tablets, and wearable devices. Large organizations typically employ distributed computer systems, from powerful parallel-processing servers located in data centres to widely dispersed personal computers and mobile devices, integrated into the organizational information systems. Sensors are becoming ever more widely distributed throughout the physical and biological environment to gather data and, in many cases, to effect control via devices known as actuators. Together with the peripheral equipment (such as magnetic or solid-state storage disks, input-output devices, and telecommunications gear), these constitute the hardware of information systems. The cost of hardware has steadily and rapidly decreased, while processing speed and storage capacity have increased vastly. This development has been occurring under Moore’s law: the power of the microprocessors at the heart of computing devices has been doubling approximately every 18 to 24 months. However, hardware’s use of electric power and its environmental impact are concerns being addressed by designers. Increasingly, computer and storage services are delivered from the cloud, that is, from shared facilities accessed over telecommunications networks.
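To give a sense of scale for the doubling rate quoted above, the following minimal sketch computes the compound growth implied by a fixed doubling period. The function name `growth_factor` and the ten-year horizon are illustrative assumptions, not part of the original text, and the model is an idealization: real hardware does not improve on an exact schedule.

```python
def growth_factor(years: float, doubling_months: float) -> float:
    """Multiplicative growth after `years` if capacity doubles every `doubling_months`."""
    doublings = years * 12 / doubling_months  # number of doubling periods elapsed
    return 2 ** doublings


# Compare the two ends of the 18-to-24-month range over a decade.
for months in (18, 24):
    factor = growth_factor(10, months)
    print(f"doubling every {months} months: about {factor:.0f}x in 10 years")
```

Under these assumptions, a decade of doubling every 24 months yields a 32-fold increase, while doubling every 18 months yields roughly a 100-fold increase, which shows how sensitive long-run projections are to the assumed period.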

Computer Software

Computer software falls into two broad classes: system software and application software. The principal system software is the operating system. It manages the hardware, data and program files, and other system resources and provides means for the user to control the computer, generally via a graphical user interface (GUI). Application software is programs designed to handle specific tasks for users. Smartphone apps became a common way for individuals to access information systems. Other examples include general-purpose application suites with their spreadsheet and word-processing programs, as well as “vertical” applications that serve a specific industry segment—for instance, an application that schedules, routes, and tracks package deliveries for an overnight carrier. Larger firms use licensed applications developed and maintained by specialized software companies, customizing them to meet their specific needs, and develop other applications in-house or on an outsourced basis. Companies may also use applications delivered as software-as-a-service (SaaS) from the cloud over the Web. Proprietary software, available from and supported by its vendors, is being challenged by open-source software available on the Web for free use and modification under a license that protects its future availability.

Telecommunications

Extensive networking infrastructure supports the growing move to cloud computing, with the information-system resources shared among multiple companies, leading to utilization efficiencies and freedom in localization of the data centres. Software-defined networking affords flexible control of telecommunications networks with algorithms that are responsive to real-time demands and resource availabilities.

Databases and Data Warehouses

Many information systems are primarily delivery vehicles for data stored in databases. A database is a collection of interrelated data organized so that individual records or groups of records can be retrieved to satisfy various criteria. Typical examples of databases include employee records and product catalogs. Databases support the operations and management functions of an enterprise. Data warehouses contain the archival data, collected over time, that can be mined for information in order to develop and market new products, serve the existing customers better, or reach out to potential new customers. Anyone who has ever purchased something with a credit card—in person, by mail order, or over the Web—is included within such data collections.
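The idea of retrieving records that satisfy given criteria can be illustrated with a small product catalog. The sketch below uses Python's built-in `sqlite3` module with an in-memory database; the table layout, the sample products, and the helper names `build_catalog` and `find_products` are hypothetical and chosen only for illustration.

```python
import sqlite3


def build_catalog() -> sqlite3.Connection:
    """Create an in-memory catalog with a few made-up product records."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE products (sku TEXT PRIMARY KEY, name TEXT, category TEXT, price REAL)"
    )
    conn.executemany(
        "INSERT INTO products VALUES (?, ?, ?, ?)",
        [
            ("A100", "Desk lamp", "lighting", 24.50),
            ("A200", "Floor lamp", "lighting", 89.00),
            ("B100", "Office chair", "furniture", 149.00),
        ],
    )
    return conn


def find_products(conn: sqlite3.Connection, category: str, max_price: float):
    """Return (sku, name) pairs in a category priced at or below max_price."""
    rows = conn.execute(
        "SELECT sku, name FROM products WHERE category = ? AND price <= ? ORDER BY sku",
        (category, max_price),
    )
    return rows.fetchall()


conn = build_catalog()
print(find_products(conn, "lighting", 50.0))
```

The query parameters are passed separately from the SQL text, which is the usual way to keep user-supplied criteria from being interpreted as SQL.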

Massive collection and processing of the quantitative, or structured, data, as well as of the textual data often gathered on the Web, has developed into a broad initiative known as “big data.” Many benefits can arise from decisions based on the facts reflected by big data. Examples include evidence-based medicine, economy of resources as a result of avoiding waste, and recommendations of new products (such as books or movies) based on a user’s interests. Big data enables innovative business models. For example, a commercial firm collects the prices of goods by crowdsourcing (collecting from numerous independent individuals) via smartphones around the world. The aggregated data supplies early information on price movements, enabling more responsive decision making than was previously possible.

The processing of textual data—such as reviews and opinions articulated by individuals on social networks, blogs, and discussion boards—permits automated sentiment analysis for marketing, competitive intelligence, new product development, and other decision-making purposes.
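As a rough illustration of automated sentiment analysis, the sketch below scores a review by counting words from two small hand-made word lists. This is a deliberately simple stand-in: the word lists and the function names `sentiment_score` and `label` are assumptions made for this example, and production systems generally rely on trained statistical or machine-learning models rather than fixed lists.

```python
import re

# Tiny illustrative lexicons; real systems use far larger, learned vocabularies.
POSITIVE = {"good", "great", "excellent", "love", "reliable"}
NEGATIVE = {"bad", "poor", "terrible", "hate", "broken"}


def sentiment_score(text: str) -> int:
    """Count positive words minus negative words in the text."""
    words = re.findall(r"[a-z']+", text.lower())
    positives = sum(word in POSITIVE for word in words)
    negatives = sum(word in NEGATIVE for word in words)
    return positives - negatives


def label(text: str) -> str:
    """Map the numeric score to a coarse sentiment label."""
    score = sentiment_score(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


for review in ("Great phone, I love the camera", "Terrible battery and a broken screen"):
    print(review, "->", label(review))
```

A word-count approach of this kind ignores negation and context ("not good" would score as positive), which is one reason more sophisticated models are used in practice.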

Human Resources and Procedures

Procedures for using, operating, and maintaining an information system are part of its documentation. For example, procedures need to be established to run a payroll program, including when to run it, who is authorized to run it, and who has access to the output. In the autonomous computing initiative, data centres are increasingly run automatically, with the procedures embedded in the software that controls those centres.

Types of Information Systems

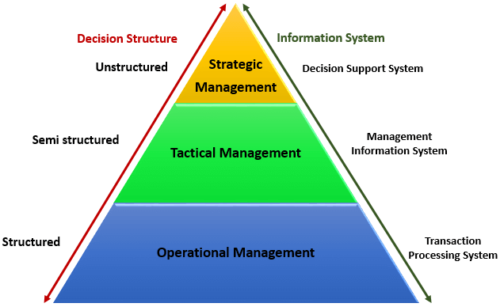

Structure of organizational information systems. Information systems consist of three layers: operational support, support of knowledge work, and management support. Operational support forms the base of an information system and contains various transaction processing systems for designing, marketing, producing, and delivering products and services. Support of knowledge work forms the middle layer; it contains subsystems for sharing information within an organization. Management support, forming the top layer, contains subsystems for managing and evaluating an organization's resources and goals.

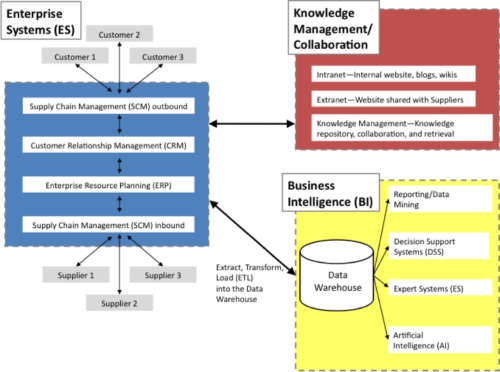

Operational Support and Enterprise Systems

The third type of enterprise system, customer relationship management (CRM), supports dealing with the company’s customers in marketing, sales, service, and new product development. A CRM system gives a business a unified view of each customer and its dealings with that customer, enabling a consistent and proactive relationship. In cocreation initiatives, the customers may be involved in the development of the company’s new products.

Many transaction processing systems support electronic commerce over the Internet. Among these are systems for online shopping, banking, and securities trading. Other systems deliver information, educational services, and entertainment on demand. Yet other systems serve to support the search for products with desired attributes (for example, keyword search on search engines), price discovery (via an auction, for example), and delivery of digital products (such as software, music, movies, or greeting cards). Social network sites, such as Facebook and LinkedIn, are a powerful tool for supporting customer communities and individuals as they articulate opinions, evolve new ideas, and are exposed to promotional messages. A growing array of specialized services and information-based products are offered by various organizations on the Web, as an infrastructure for electronic commerce has emerged on a global scale. Transaction processing systems accumulate the data in databases and data warehouses that are necessary for the higher-level information systems. Enterprise systems also provide software modules needed to perform many of these higher-level functions.

Support of Knowledge Work

Professional Support Systems

Collaboration Systems

Knowledge Management Systems (KMS)

Management Support

Management Reporting Systems

Information systems support all levels of management, from those in charge of short-term schedules and budgets for small work groups to those concerned with long-term plans and budgets for the entire organization. Management reporting systems provide routine, detailed, and voluminous information reports specific to each manager’s areas of responsibility. These systems are typically used by first-level supervisors. Generally, such reports focus on past and present activities, rather than projecting future performance. To prevent information overload, reports may be automatically sent only under exceptional circumstances or at the specific request of a manager.
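The practice of sending a report only under exceptional circumstances can be sketched as a simple filter over budget figures. In the example below, the `BudgetLine` record, the `exception_report` function, and the 10 percent tolerance are all hypothetical choices made for illustration; the sketch also assumes every budget amount is nonzero.

```python
from dataclasses import dataclass


@dataclass
class BudgetLine:
    department: str
    budget: float  # planned spending; assumed nonzero
    actual: float  # recorded spending


def exception_report(lines, tolerance=0.10):
    """Return (department, relative deviation) for lines outside the tolerance."""
    flagged = []
    for line in lines:
        deviation = (line.actual - line.budget) / line.budget
        if abs(deviation) > tolerance:
            flagged.append((line.department, round(deviation, 3)))
    return flagged


lines = [
    BudgetLine("Sales", 1000, 1040),   # 4% over: within tolerance, not reported
    BudgetLine("Support", 500, 600),   # 20% over: reported
    BudgetLine("IT", 800, 640),        # 20% under: reported
]
print(exception_report(lines))
```

Only the departments that deviate from plan by more than the tolerance appear in the output, so a manager sees two lines instead of three; with hundreds of lines the reduction is what keeps the report readable.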

Decision Support and Business Intelligence

Executive Information Systems

Acquiring Information Systems and Services

Information systems are a major corporate asset, with respect both to the benefits they provide and to their high costs. Therefore, organizations have to plan for the long term when acquiring information systems and services that will support business initiatives. At the same time, firms have to be responsive to emerging opportunities. On the basis of long-term corporate plans and the requirements of various individuals from data workers to top management, essential applications are identified and project priorities are set. For example, certain projects may have to be carried out immediately to satisfy a new government reporting regulation or to interact with a new customer’s information system. Other projects may be given a higher priority because of their strategic role or greater expected benefits. Once the need for a specific information system has been established, the system has to be acquired. This is generally done in the context of the already existing information systems architecture of the firm. The acquisition of information systems can either involve external sourcing or rely on internal development or modification. With today’s highly developed IT industry, companies tend to acquire information systems and services from specialized vendors. The principal tasks of information systems specialists involve modifying the applications for their employer’s needs and integrating the applications to create a coherent systems architecture for the firm. Generally, only smaller applications are developed internally. Certain applications of a more personal nature may be developed by the end users themselves.

Acquisition from External Sources

Information Systems Development

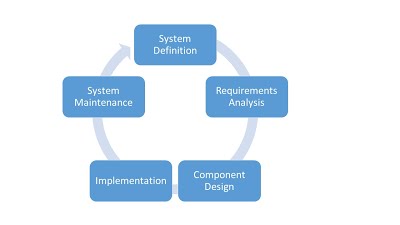

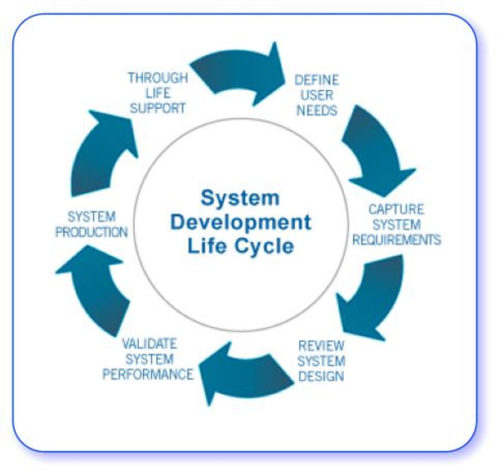

When an information system is developed internally by an organization, one of two broad methods is used: life-cycle development or rapid application development (RAD). The same methods are used by software vendors, which need to provide more general, customizable systems. Large organizational systems, such as enterprise systems, are generally developed and maintained through a systematic process, known as a system life cycle, which consists of six stages: feasibility study, system analysis, system design, programming and testing, installation, and operation and maintenance. The first five stages are system development proper, and the last stage is the long-term exploitation. Following a period of use (with maintenance as needed), the information system may be either phased out or upgraded. In the case of a major upgrade, the system enters another development life cycle.

Managing Information Systems

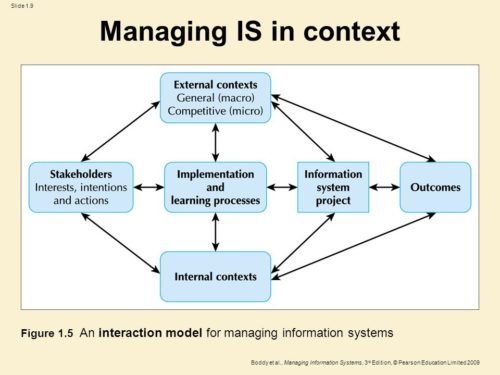

For an organization to use its information services to support its operations or to innovate by launching a new initiative, those services have to be part of a well-planned infrastructure of core resources. The specific systems ought to be configured into a coherent architecture to deliver the necessary information services. Many organizations rely on outside firms—that is, specialized IT companies—to deliver some, or even all, of their information services. If located in-house, the management of information systems can be decentralized to a certain degree to correspond to the organization’s overall structure.

Infrastructure and Architecture

A well-designed information system rests on a coherent foundation that supports responsive change (and, thus, the organization’s agility) as new business or administrative initiatives arise. Known as the information system infrastructure, the foundation consists of core telecommunications networks, databases and data warehouses, software, hardware, and procedures managed by various specialists. With business globalization, an organization’s infrastructure often crosses many national boundaries. Establishing and maintaining such a complex infrastructure requires extensive planning and consistent implementation to handle strategic corporate initiatives, transformations, mergers, and acquisitions. Information system infrastructure should be established in order to create meaningful options for future corporate development. When organized into a coherent whole, the specific information systems that support operations, management, and knowledge work constitute the system architecture of an organization. Clearly, an organization’s long-term general strategic plans must be considered when designing an information system infrastructure and architecture.

Security and Control

Systems Security

Information systems security is responsible for the integrity and safety of system resources and activities. Most organizations in developed countries are dependent on the secure operation of their information systems. In fact, the very fabric of societies often depends on this security. Multiple infrastructural grids—including power, water supply, and health care—rely on it. Information systems are at the heart of intensive care units and air traffic control systems. Financial institutions could not survive a total failure of their information systems for longer than a day or two. Electronic funds transfer systems (EFTS) handle immense amounts of money that exist only as electronic signals sent over the networks or as spots on storage disks. Information systems are vulnerable to a number of threats and require strict controls, such as continuing countermeasures and regular audits to ensure that the system remains secure. The first step in creating a secure information system is to identify threats. Once potential problems are known, the second step, establishing controls, can be taken. Finally, the third step consists of audits to discover any breach of security.

Computer Crime and Abuse

Computer crime—illegal acts in which computers are the primary tool—costs the world economy many billions of dollars annually. Computer abuse does not rise to the level of crime, yet it involves unethical use of a computer. The objectives of the so-called hacking of information systems include vandalism, theft of consumer information, governmental and commercial espionage, sabotage, and cyberwar. Some of the more widespread means of computer crime include phishing and planting of malware, such as computer viruses and worms, Trojan horses, and logic bombs.

Once a system connected to the Internet is invaded, it may be used to take over many others and organize them into so-called botnets that can launch massive attacks against other systems to steal information or sabotage their operation. There is a growing concern that, in the “Internet of things,” computer-controlled devices such as refrigerators or TV sets may be deployed in botnets. The variety of devices makes them difficult to control against malware.

Systems Controls

Securing Information

Systems Audit

Top 10 Information System Trends for the Future

Autonomous Things

Autonomous things, such as robots, drones and autonomous vehicles, use AI to automate functions previously performed by humans. Their automation goes beyond the automation provided by rigid programming models, and they exploit AI to deliver advanced behaviors that interact more naturally with their surroundings and with people.

“As autonomous things proliferate, we expect a shift from stand-alone intelligent things to a swarm of collaborative intelligent things, with multiple devices working together, either independently of people or with human input.” For example, if a drone examined a large field and found that it was ready for harvesting, it could dispatch an autonomous harvester. Or in the delivery market, the most effective solution may be to use an autonomous vehicle to move packages to the target area. Robots and drones on board the vehicle could then ensure final delivery of the package.

Augmented Analytics

Augmented analytics focuses on a specific area of augmented intelligence, using machine learning (ML) to transform how analytics content is developed, consumed and shared. Augmented analytics capabilities will advance rapidly to mainstream adoption, as a key feature of data preparation, data management, modern analytics, business process management, process mining and data science platforms. Automated insights from augmented analytics will also be embedded in enterprise applications — for example, those of the HR, finance, sales, marketing, customer service, procurement and asset management departments — to optimize the decisions and actions of all employees within their context, not just those of analysts and data scientists. Augmented analytics automates the process of data preparation, insight generation and insight visualization, eliminating the need for professional data scientists in many situations.

This will lead to citizen data science, an emerging set of capabilities and practices that enables users whose main job is outside the field of statistics and analytics to extract predictive and prescriptive insights from data. Through 2020, the number of citizen data scientists will grow five times faster than the number of expert data scientists. Organizations can use citizen data scientists to fill the data science and machine learning talent gap caused by the shortage and high cost of data scientists.

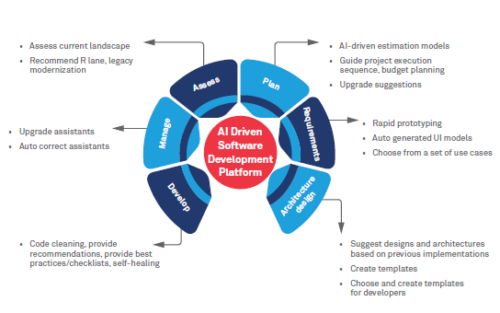

AI-Driven Development

The market is rapidly shifting from an approach in which professional data scientists must partner with application developers to create most AI-enhanced solutions to a model in which the professional developer can operate alone using predefined models delivered as a service. This provides the developer with an ecosystem of AI algorithms and models, as well as development tools tailored to integrating AI capabilities and models into a solution. Another level of opportunity for professional application development arises as AI is applied to the development process itself to automate various data science, application development and testing functions. By 2022, at least 40 percent of new application development projects will have AI co-developers on their team.

Ultimately, highly advanced AI-powered development environments automating both functional and nonfunctional aspects of applications will give rise to a new age of the ‘citizen application developer’ where nonprofessionals will be able to use AI-driven tools to automatically generate new solutions. Tools that enable nonprofessionals to generate applications without coding are not new, but we expect that AI-powered systems will drive a new level of flexibility.

Digital Twins

A digital twin refers to the digital representation of a real-world entity or system. By 2020, Gartner estimates there will be more than 20 billion connected sensors and endpoints and digital twins will exist for potentially billions of things. Organizations will implement digital twins simply at first. They will evolve them over time, improving their ability to collect and visualize the right data, apply the right analytics and rules, and respond effectively to business objectives.

“One aspect of the digital twin evolution that moves beyond IoT will be enterprises implementing digital twins of their organizations (DTOs). A DTO is a dynamic software model that relies on operational or other data to understand how an organization operationalizes its business model, connects with its current state, deploys resources and responds to changes to deliver expected customer value,” said Mr. Cearley. “DTOs help drive efficiencies in business processes, as well as create more flexible, dynamic and responsive processes that can potentially react to changing conditions automatically.”

Empowered Edge

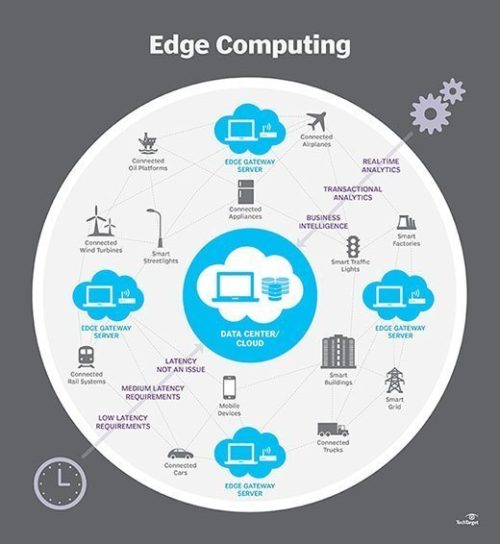

The edge refers to endpoint devices used by people or embedded in the world around us. Edge computing describes a computing topology in which information processing, and content collection and delivery, are placed closer to these endpoints. It tries to keep the traffic and processing local, with the goal being to reduce traffic and latency.
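As a rough illustration of keeping traffic and processing local, the sketch below summarizes raw sensor samples on the edge device and would forward only the summaries upstream. The function name, batch size and summary fields are assumptions made for this example.

```python
from statistics import mean


def summarize_on_edge(samples, batch_size=10):
    """Reduce raw samples to one small summary per batch before upload."""
    summaries = []
    for start in range(0, len(samples), batch_size):
        batch = samples[start:start + batch_size]
        summaries.append({
            "count": len(batch),
            "min": min(batch),
            "max": max(batch),
            "mean": mean(batch),
        })
    return summaries


raw = list(range(100))              # 100 raw readings captured locally
to_upload = summarize_on_edge(raw)  # only these summaries leave the device
print(len(raw), "readings reduced to", len(to_upload), "messages")
```

The trade-off is that the central service sees aggregates rather than every reading, so the batch size has to match what downstream analytics actually need.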

In the near term, edge is being driven by IoT and the need to keep the processing close to the endpoint rather than on a centralized cloud server. However, rather than create a new architecture, cloud computing and edge computing will evolve as complementary models, with cloud services being managed as a centralized service that executes not only on centralized servers but also on distributed servers on-premises and on the edge devices themselves.

Over the next five years, specialized AI chips, along with greater processing power, storage and other advanced capabilities, will be added to a wider array of edge devices. The extreme heterogeneity of this embedded IoT world and the long life cycles of assets such as industrial systems will create significant management challenges. Longer term, as 5G matures, the expanding edge computing environment will have more robust communication back to centralized services. 5G provides lower latency, higher bandwidth, and (very importantly for edge) a dramatic increase in the number of nodes (edge endpoints) per square km.

Immersive Experience

Conversational platforms are changing the way in which people interact with the digital world. Virtual reality (VR), augmented reality (AR) and mixed reality (MR) are changing the way in which people perceive the digital world. This combined shift in perception and interaction models leads to the future immersive user experience.

Over time, we will shift from thinking about individual devices and fragmented user interface (UI) technologies to a multichannel and multimodal experience. The multimodal experience will connect people with the digital world across hundreds of edge devices that surround them, including traditional computing devices, wearables, automobiles, environmental sensors and consumer appliances. The multichannel experience will use all human senses as well as advanced computer senses (such as heat, humidity and radar) across these multimodal devices. This multiexperience environment will create an ambient experience in which the spaces that surround us define “the computer” rather than the individual devices. In effect, the environment is the computer.

Blockchain

Blockchain, a type of distributed ledger, promises to reshape industries by enabling trust, providing transparency and reducing friction across business ecosystems, potentially lowering costs, reducing transaction settlement times and improving cash flow. Today, trust is placed in banks, clearinghouses, governments and many other institutions as central authorities with the “single version of the truth” maintained securely in their databases. The centralized trust model adds delays and friction costs (commissions, fees and the time value of money) to transactions. Blockchain provides an alternative trust model and removes the need for central authorities in arbitrating transactions.
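One ingredient of this alternative trust model is that each ledger entry is cryptographically linked to the one before it, so later tampering is detectable. The sketch below shows only that hash linking. It deliberately omits distribution, consensus and signatures, and the function names and block layout are assumptions made for illustration.

```python
import hashlib
import json

GENESIS_PREV = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, prev_hash, data):
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "prev_hash": prev_hash, "data": data},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain, data):
    """Append a new block that commits to the current tip of the chain."""
    index = len(chain)
    prev_hash = chain[-1]["hash"] if chain else GENESIS_PREV
    chain.append({
        "index": index,
        "prev_hash": prev_hash,
        "data": data,
        "hash": block_hash(index, prev_hash, data),
    })


def is_valid(chain):
    """Recompute every hash and check each link to the previous block."""
    expected_prev = GENESIS_PREV
    for i, block in enumerate(chain):
        if block["index"] != i or block["prev_hash"] != expected_prev:
            return False
        if block["hash"] != block_hash(i, block["prev_hash"], block["data"]):
            return False
        expected_prev = block["hash"]
    return True


ledger = []
append_block(ledger, "Alice pays Bob 5")
append_block(ledger, "Bob pays Carol 2")
print(is_valid(ledger))
ledger[0]["data"] = "Alice pays Bob 500"   # tamper with history
print(is_valid(ledger))
```

In a real blockchain many independent parties hold copies of the ledger and agree on new blocks through a consensus protocol, which is what removes the need for a central authority.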

“Current blockchain technologies and concepts are immature, poorly understood and unproven in mission-critical, at-scale business operations. This is particularly so with the complex elements that support more sophisticated scenarios,” said Mr. Cearley. “Despite the challenges, the significant potential for disruption means CIOs and IT leaders should begin evaluating blockchain, even if they don’t aggressively adopt the technologies in the next few years.”

Many blockchain initiatives today do not implement all of the attributes of blockchain (for example, a highly distributed database). These blockchain-inspired solutions are positioned as a means to achieve operational efficiency by automating business processes or by digitizing records. They have the potential to enhance sharing of information among known entities, as well as to improve opportunities for tracking and tracing physical and digital assets. However, these approaches miss the value of true blockchain disruption and may increase vendor lock-in. Organizations choosing this option should understand the limitations, be prepared to move to complete blockchain solutions over time, and recognize that the same outcomes may be achieved with more efficient and tuned use of existing nonblockchain technologies.

Smart Spaces

A smart space is a physical or digital environment in which humans and technology-enabled systems interact in increasingly open, connected, coordinated and intelligent ecosystems. Multiple elements — including people, processes, services and things — come together in a smart space to create a more immersive, interactive and automated experience for a target set of people and industry scenarios.

“This trend has been coalescing for some time around elements such as smart cities, digital workplaces, smart homes and connected factories. We believe the market is entering a period of accelerated delivery of robust smart spaces with technology becoming an integral part of our daily lives, whether as employees, customers, consumers, community members or citizens.”

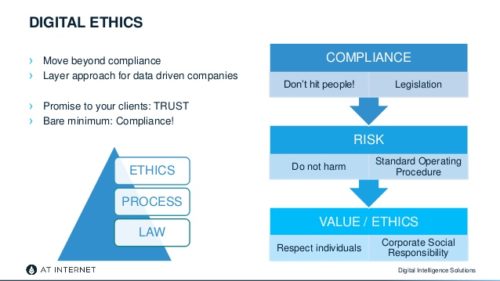

Digital Ethics and Privacy

Digital ethics and privacy is a growing concern for individuals, organizations and governments. People are increasingly concerned about how their personal information is being used by organizations in both the public and private sector, and the backlash will only increase for organizations that are not proactively addressing these concerns.

“Any discussion on privacy must be grounded in the broader topic of digital ethics and the trust of your customers, constituents and employees. While privacy and security are foundational components in building trust, trust is actually about more than just these components. Trust is the acceptance of the truth of a statement without evidence or investigation. Ultimately an organization’s position on privacy must be driven by its broader position on ethics and trust. Shifting from privacy to ethics moves the conversation beyond ‘are we compliant’ toward ‘are we doing the right thing.’”

Quantum Computing

Quantum computing (QC) is a type of nonclassical computing that operates on the quantum state of subatomic particles (for example, electrons and ions) that represent information as elements denoted as quantum bits (qubits). The parallel execution and exponential scalability of quantum computers mean they excel with problems too complex for a traditional approach or where traditional algorithms would take too long to find a solution. Industries such as automotive, financial, insurance, pharmaceuticals, military and research organizations have the most to gain from the advancements in QC. In the pharmaceutical industry, for example, QC could be used to model molecular interactions at atomic levels to accelerate time to market for new cancer-treating drugs, or QC could accelerate and more accurately predict the interaction of proteins, leading to new pharmaceutical methodologies.
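One way to get a feel for the exponential scaling mentioned above: describing the general state of n qubits on a classical machine takes 2^n complex amplitudes. The short sketch below computes that count and the memory a classical simulator would need, assuming 16 bytes per amplitude (one double-precision complex number). The function names are illustrative only.

```python
def amplitudes_needed(n_qubits):
    """Number of complex amplitudes in a general n-qubit state."""
    return 2 ** n_qubits


def statevector_bytes(n_qubits, bytes_per_amplitude=16):
    """Memory to store the full state classically (assumed 16 B/amplitude)."""
    return amplitudes_needed(n_qubits) * bytes_per_amplitude


for n in (10, 20, 30, 40):
    gib = statevector_bytes(n) / 2 ** 30
    print(f"{n} qubits: {amplitudes_needed(n)} amplitudes, {gib:.6g} GiB")
```

Each added qubit doubles the classical memory required, which is why problems that fit naturally on a modest number of qubits can be out of reach for brute-force classical simulation.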

CIOs and IT leaders should start planning for QC by increasing their understanding of the technology and how it can apply to real-world business problems. Learn while the technology is still in the emerging state. Identify real-world problems where QC has potential and consider the possible impact on security. But don’t believe the hype that it will revolutionize things in the next few years. Most organizations should learn about and monitor QC through 2022 and perhaps exploit it from 2023 or 2025.
