About ARC - Advanced Research Computing

"Information is being generated from every imaginable activity and comes at us constantly from all corners of the earth."

As the move to open data accelerates and research data grows exponentially, so does the need to act quickly with sophisticated, well-curated data management plans that enable data access while protecting privacy and security.

While collecting data is important, understanding its significance and how to use it is where discoveries lie. Big data is data too large or complex to be processed by standard computers: a desktop machine, for example, may not have enough memory to hold it, or may take too long to process it. That is where advanced research computing comes in.

Advanced research computing includes access to High Performance Computing (supercomputers), cloud data storage and management, networking and visualization to help solve complex problems.

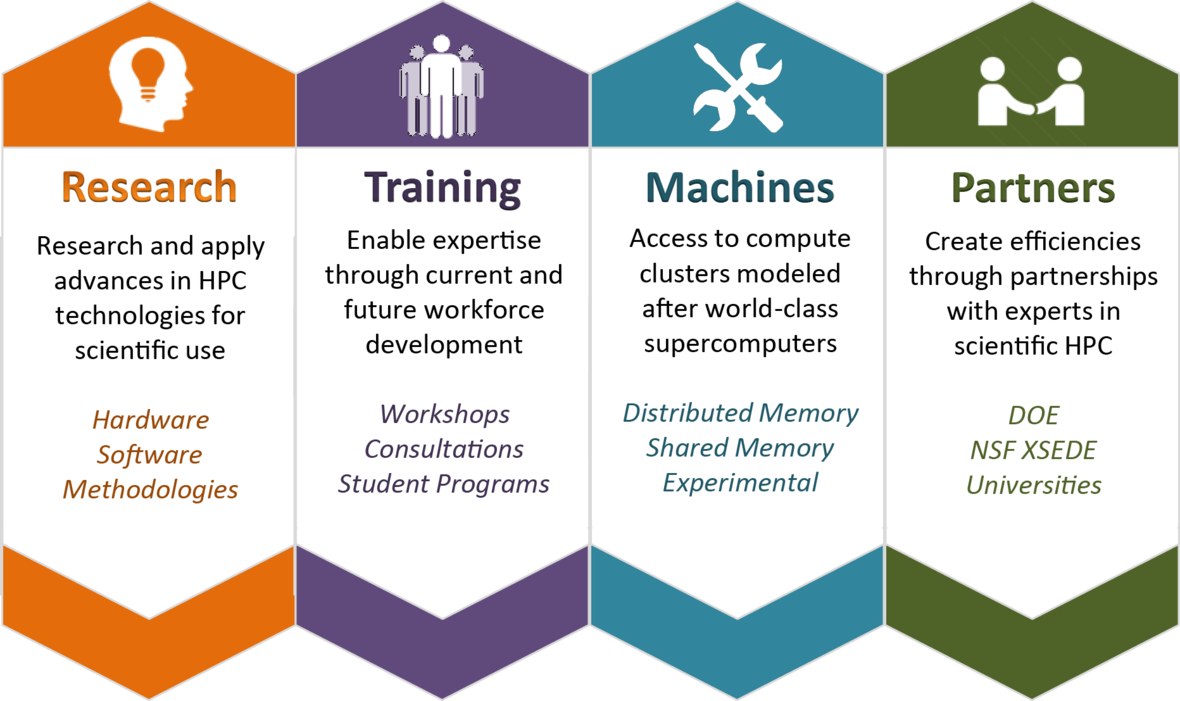

But advanced computing offers much more than hardware. Users also have access to highly trained scientific and technical staff who support the systems that are critical for researchers.

ARC - Advanced Research Computing: Critical Elements

- High Performance Computing (Supercomputers)

- Cloud Data Storage and Management

- Consulting and Training

- Research - New Technologies and Computing Tools

- Partnerships

Interpreting big data using advanced computing capabilities is transforming how we conduct research, make products and deliver services. It involves gathering, processing and disseminating massive amounts of data, which was impossible in the past, and translating it into usable information. For example, advanced research computing can help researchers determine a person’s risk for developing certain diseases, improve healthcare outcomes for premature babies and boost farm operations to create positive environmental impacts.

High Performance Computing (Supercomputers)

High Performance Computing (HPC), or supercomputing, uses the largest computers available to tackle the biggest problems facing science, society and industry. HPC typically involves computationally intense workloads, such as large and complex simulation models or extremely large data sets.

Supercomputers power applications that include global climate simulations, astrophysics and astronomy, physics, chemistry, material science, engineering, biomedical sciences and genomics. Economists studying the stock market model the global effects of capital flow. Atmospheric scientists analyze global weather patterns. Psychologists model the brain and human memory. Bio-technologists and computational chemists design complex molecules for innovative new drugs. Engineers model blood flow in artificial hearts.

This all represents complex research that requires the analysis of petabytes of data and intricate mathematical calculations that would take years to perform on even the most sophisticated desktop computer. Using High Performance Computing systems, researchers can do these calculations in weeks, days or even hours.
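To make the "years to hours" claim concrete, here is a back-of-the-envelope sketch in Python. It assumes a hypothetical job that would need 3 years on a single desktop core and estimates wall-clock time on more cores using Amdahl's law, a standard speedup model that this article does not itself cite. The core counts and the 1% serial fraction are illustrative values, not measurements.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def amdahl_speedup(cores: int, serial_fraction: float) -> float:
    """Amdahl's law: speedup = 1 / (s + (1 - s) / N)."""
    return 1.0 / (serial_fraction + (1.0 - serial_fraction) / cores)


def parallel_hours(serial_hours: float, cores: int, serial_fraction: float) -> float:
    """Estimated wall-clock hours when the job runs on `cores` cores."""
    return serial_hours / amdahl_speedup(cores, serial_fraction)


if __name__ == "__main__":
    serial = 3 * HOURS_PER_YEAR  # hypothetical job: 3 years on one desktop core
    for cores in (1, 100, 10_000):
        for s in (0.0, 0.01):
            hours = parallel_hours(serial, cores, s)
            print(f"{cores:>6} cores, serial fraction {s:.2f}: {hours:10.1f} h")
```

Under perfect scaling (serial fraction 0), 10,000 cores bring the 26,280-hour job down to about 2.6 hours. If just 1% of the work cannot be parallelized, the speedup is capped near 100x and the same job takes roughly 265 hours, about 11 days. Whether a problem lands in "weeks, days or even hours" therefore depends heavily on how well the code parallelizes.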

Issues or Challenges

Although HPC presents many opportunities for research and advancement in a wide range of applications, it also presents significant challenges that must be addressed. Security is a key concern for all computing as our lives become more and more intertwined with information processing. Some traditional security techniques are not effective on HPC systems because they cannot keep up with the speed of these systems. There needs to be a balance between security and convenience; because HPC systems tend to favor convenience, intrusion prevention is somewhat harder on them and they are more vulnerable.

Performance, however, is always the primary concern in HPC. By definition, HPC systems are optimized for high performance, and performance is measured as speed. To achieve higher performance, microprocessor vendors have increased the number of transistors per chip, and with it power consumption, every 18-24 months. As a result, keeping a large HPC system working properly requires continuous cooling of a large computer room, which creates a large operational cost.
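A worked example of that growth rate: reading it as the familiar rule of thumb that transistor counts double every T months, with T between 18 and 24, gives an idealized exponential model for the transistor count N(t) after t months, starting from N_0. Over one decade (t = 120 months):

```latex
\begin{aligned}
N(t) &= N_0 \cdot 2^{t/T} \\
\frac{N(120)}{N_0} &= 2^{120/24} = 2^{5} = 32 && (T = 24 \text{ months}) \\
\frac{N(120)}{N_0} &= 2^{120/18} \approx 101.6 && (T = 18 \text{ months})
\end{aligned}
```

Under this idealized model, a decade of the trend means roughly 30 to 100 times more transistors per chip, which helps explain why power and cooling grew into a major operating cost.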

Power awareness is an important issue in HPC. Ignoring power consumption can lead to high operational costs and can affect reliability and productivity. Modern parallel supercomputers are dominated by power-hungry commodity machines capable of performing billions of operations per second. Breaking the exascale barrier (10^18 operations per second) requires overcoming several challenges related to energy, memory and communication costs.

While computer performance has improved dramatically, real productivity (defined in terms of achieving mission goals) on these ever-faster machines has not kept pace. Indeed, researchers are finding it increasingly costly and time-consuming to update their software to take advantage of new hardware.

The energy efficiency of applications running on HPC platforms is hard to improve due to the lack of systems and tools that can provide accurate power consumption data of all major components including CPUs, DRAMs, disks, accelerators and coprocessors. Minimizing energy consumption of HPC requires novel energy-conscious technologies at multiple layers.
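To illustrate what per-component power data enables, the Python sketch below turns power samples (watts, taken at a fixed interval) into energy per component with the trapezoidal rule and reports each component's share. The component names, the one-second interval and the readings are all hypothetical; on a real system the samples would come from whatever power-monitoring interface its hardware exposes, which is exactly the data the paragraph above says is often missing or inaccurate.

```python
from typing import Dict, List


def energy_joules(samples_w: List[float], interval_s: float) -> float:
    """Trapezoidal integral of evenly spaced power samples (W) over time (s)."""
    if len(samples_w) < 2:
        return 0.0  # a single sample spans no time interval
    inner = sum(samples_w[1:-1])
    return interval_s * (0.5 * samples_w[0] + inner + 0.5 * samples_w[-1])


def energy_report(readings: Dict[str, List[float]], interval_s: float) -> Dict[str, float]:
    """Energy per component in kWh (1 kWh = 3.6e6 J)."""
    return {name: energy_joules(w, interval_s) / 3.6e6 for name, w in readings.items()}


if __name__ == "__main__":
    # Hypothetical readings for one compute node, sampled once per second.
    readings = {
        "cpu": [180.0, 210.0, 205.0, 190.0],
        "dram": [30.0, 32.0, 31.0, 30.0],
        "gpu": [250.0, 300.0, 295.0, 260.0],
    }
    report = energy_report(readings, interval_s=1.0)
    total = sum(report.values())
    for name, kwh in report.items():
        print(f"{name:>5}: {kwh * 1000:.4f} Wh ({kwh / total:.1%} of node energy)")
```

Without trustworthy samples for every component, a breakdown like this cannot show where energy is actually going, so it is hard to know which layer to optimize.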

The adoption of cloud computing in HPC has become appealing to enterprises and service providers. HPC clouds expand the application user base, but with the cloud as an additional deployment option, HPC users have to deal with heterogeneous resources.

[Figure: HPC Architecture]

Cloud Data Storage and Management

Cloud storage is any file or data storage hosted on computers other than your own. Cloud storage from the Advanced Computing Group is hosted on our own storage servers. Storage with the Advanced Computing Group is fast and its networking is reliable, both of which depend on the quality of the equipment and on dedicated staff.

Cloud Computing, with its recent rapid expansion and development, has grabbed the attention of HPC users and developers. Cloud Computing attempts to provide HPC-as-a-Service just like other services currently available in the Cloud, such as Software-as-a-Service, Platform-as-a-Service and Infrastructure-as-a-Service. HPC users may benefit from the Cloud in several ways, including scalability and resources that are on-demand, fast and inexpensive. On the other hand, moving HPC applications to the Cloud brings its own challenges, such as virtualization overhead, multi-tenancy of resources and network latency. Much research is currently being done to overcome these challenges and make HPC in the Cloud a more realistic possibility.

In 2016 Penguin Computing, R-HPC, Amazon Web Services, Univa, Silicon Graphics International, Sabalcore and Gomput started to offer HPC cloud computing. The Penguin On Demand (POD) cloud uses a bare-metal compute model to execute code, but each user is given a virtualized login node. POD computing nodes are connected via non-virtualized 10 Gbit/s Ethernet or QDR InfiniBand networks. User connectivity to the POD data center ranges from 50 Mbit/s to 1 Gbit/s. Citing Amazon's EC2 Elastic Compute Cloud, Penguin Computing argues that virtualization of compute nodes is not suitable for HPC. Penguin Computing has also criticized HPC clouds for potentially allocating computing nodes to customers that are far apart, causing latency that impairs performance for some HPC applications.
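The connectivity figures above matter for data-heavy work. As a quick illustration, the Python sketch below estimates how long it takes to move a hypothetical 1 TB dataset (10^12 bytes) at both ends of the quoted 50 Mbit/s to 1 Gbit/s range. It assumes the full link rate is available; the optional `efficiency` factor is an illustrative way to account for protocol overhead and sharing, not a measured value.

```python
def transfer_hours(size_bytes: float, link_bits_per_s: float, efficiency: float = 1.0) -> float:
    """Hours to move size_bytes over a link running at efficiency * link_bits_per_s."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    bits = size_bytes * 8  # 8 bits per byte
    return bits / (link_bits_per_s * efficiency) / 3600


if __name__ == "__main__":
    dataset = 1e12  # hypothetical 1 TB dataset, decimal units
    for label, rate in (("50 Mbit/s", 50e6), ("1 Gbit/s", 1e9)):
        print(f"{label:>10}: {transfer_hours(dataset, rate):6.1f} h")
```

At 50 Mbit/s the transfer takes about 44 hours; at 1 Gbit/s, about 2.2 hours. For jobs whose inputs or outputs run to terabytes, the link to the cloud can take longer than the computation itself.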

Future of Advanced Research Computing

Advanced Research Computing or High-Performance Computing (HPC) is used to address and solve the world’s most complex computational problems. For decades, HPC has established itself as an essential tool for discoveries, innovations and new insights in science, research and development, engineering and business across a wide range of application areas in academia and industry. It has become an integral part of the scientific method – the third leg along with theory and experiment.

Today, High-Performance Computing is also well recognized as being of strategic and economic value: HPC matters and is transforming industries. High Performance Computing enables scientists and engineers to solve complex and large science, engineering and business problems using advanced algorithms and applications that require very high compute capabilities, fast memory and storage, high bandwidth and low latency, high-fidelity visualization, and enhanced networking.

The IT industry is also being transformed by cloud, big data, social media, artificial intelligence and “Internet of Things” technologies and business models. All of these trends require advanced computational simulation models and powerful, highly scalable systems. Hence, sophisticated HPC capabilities are critical to organizations and companies that want to establish and enhance leadership positions in their respective areas.

Some industry verticals and application areas where Advanced Research Computing is expected to be used are as follows:

- Manufacturing, Automotive & Computer Aided Engineering (CAE) - Investments in Advanced Computing Resources are expected to play a pivotal role in Advanced Manufacturing, including developing innovation infrastructure and providing the backbone for commercial and 3D manufacturing. The automotive technologies identified include batteries; algorithms and machine learning; quantum security of the Internet; and robotics and autonomous systems. Individually and in combination, these areas are generating opportunities to create applications for businesses, governments and individuals.

These digital technologies sense, detect and measure what is happening and use the data this generates to produce insights and drive changes. These technologies have real potential to improve productivity. Key opportunities include:

• Connected factories
• Connected supply chains
• Virtual reality and augmented reality

- Aerospace Industry - To enable a competitive aerospace industry, Advanced Research Computing scientists and researchers are developing new computer algorithms to study the combustion of conventional and alternative fuels in practical devices. Current techniques and solution algorithms lack the modeling detail needed to design the next generation of quiet, high-efficiency, low-emission combustors. Scientists are addressing this by applying new and innovative mathematical models and computational tools to improve the understanding of combustion phenomena.

- Weather Forecast and Climate Research - Providing high-performance computing (HPC) capabilities and expertise to scientists accelerates and expands scientific discovery in the areas of Climate Change and Advanced Disaster Management. ARC projects enable scientists to take advantage of supercomputing, saving time and working more efficiently.

- Energy, Oil & Gas Industry, Geophysics - HPC plays a major role in processing and interpreting data from oil and gas geophysical exploration, the science of mapping the underground distribution of minerals based on differences in their physical properties. The main computing task in oil and gas modeling is seismic data processing, which involves solving data-intensive wave equations.

- Life-Science and Bioinformatics (Genomics) - The computational power, memory and storage capabilities offered by HPC resources are being applied to advanced neural circuit simulations. Even standard analyses of massive neurophysiology datasets benefit from HPC systems. Harnessing HPC resources to address “Grand Challenge Problems” will require effective collaboration between computational neuroscientists and computer scientists.

- Universities (Academics) - Machine Learning, Deep Learning, Artificial Intelligence (AI), Neural Networks
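The Energy, Oil & Gas item above mentions solving wave equations. As a rough illustration of what such a kernel looks like, the Python sketch below solves the 1D constant-velocity acoustic wave equation u_tt = c^2 u_xx with an explicit leapfrog finite-difference scheme. It is a toy under stated assumptions: the homogeneous medium, fixed (zero) boundaries, the Gaussian starting pulse and the function name `simulate_wave_1d` are illustrative choices, not the method of any particular geophysics package. Production geophysical workloads solve this kind of equation in 2D or 3D over heterogeneous velocity models and many source positions, which is where the data and compute volume come from.

```python
import math
from typing import List


def simulate_wave_1d(nx: int, nt: int, c: float, dx: float, dt: float) -> List[float]:
    """Solve u_tt = c^2 * u_xx on nx grid points for nt >= 1 time steps.

    Uses the explicit leapfrog (second-order central difference) scheme with
    u = 0 at both ends and a Gaussian pulse at rest as the initial condition.
    Returns the displacement field after nt steps.
    """
    if nt < 1 or nx < 3:
        raise ValueError("need nt >= 1 and nx >= 3")
    courant = c * dt / dx
    if courant > 1.0:  # CFL stability limit for this explicit scheme
        raise ValueError(f"unstable: Courant number {courant:.2f} > 1")
    r2 = courant ** 2
    mid = (nx - 1) / 2
    sigma = 5 * dx  # pulse width: an arbitrary illustrative choice
    u_prev = [math.exp(-((i - mid) * dx) ** 2 / (2 * sigma ** 2)) for i in range(nx)]
    u_prev[0] = u_prev[-1] = 0.0

    # First step for zero initial velocity: u1 = u0 + 0.5 * r^2 * (discrete Laplacian of u0)
    u_curr = u_prev[:]
    for i in range(1, nx - 1):
        u_curr[i] = u_prev[i] + 0.5 * r2 * (u_prev[i + 1] - 2 * u_prev[i] + u_prev[i - 1])

    # Remaining steps: u_next = 2u - u_prev + r^2 * (discrete Laplacian of u)
    for _ in range(nt - 1):
        u_next = [0.0] * nx  # end points stay fixed at zero
        for i in range(1, nx - 1):
            u_next[i] = (2 * u_curr[i] - u_prev[i]
                         + r2 * (u_curr[i + 1] - 2 * u_curr[i] + u_curr[i - 1]))
        u_prev, u_curr = u_curr, u_next
    return u_curr


if __name__ == "__main__":
    # Illustrative parameters: c = 1500 m/s, 1 m grid spacing, Courant number 0.75
    u = simulate_wave_1d(nx=201, nt=60, c=1500.0, dx=1.0, dt=0.0005)
    peak = max(range(len(u)), key=lambda i: u[i])
    print(f"max displacement {u[peak]:.3f} at grid point {peak}")
```

The Courant check enforces the standard stability condition for this explicit scheme (c·dt/dx ≤ 1). With the example parameters the initial pulse splits into two pulses of roughly half the original amplitude travelling in opposite directions, as the exact solution for a pulse starting at rest predicts.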

Other areas where Advanced Research Computing is being effectively pursued to solve future problems are listed below. As applications become increasingly complex, they provide many opportunities to apply HPC in new ways.

- Astrophysics, High-Energy Physics, Computational Chemistry, Material Science

- Financial Services Industry (FSI)

- Digital Content Creation (DCC)

- Defense

- Security and Intelligence

